AMD Unveiled the 'MI300X' Chip Optimized for AI Workloads

Colin Smith — June 15, 2023 — Tech

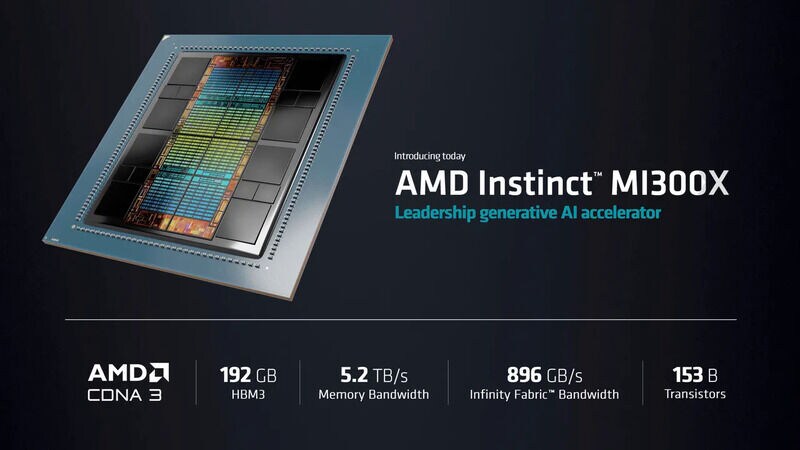

The Instinct MI300X is AMD’s latest and most advanced AI chip, designed for large language models and generative AI applications. Built on AMD’s CDNA 3 architecture, it combines multiple GPU chiplets linked to shared memory by high-speed interconnects. The MI300X can run Falcon, a 40-billion-parameter model, entirely in its onboard memory, avoiding data transfers to external memory. The chip also offers 5.2 terabytes per second of memory bandwidth and 192 gigabytes of memory capacity.
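To see why a 40-billion-parameter model can fit on a single MI300X, here is a rough back-of-the-envelope sketch. It assumes 16-bit weights (2 bytes per parameter) and counts only the model weights, ignoring activations, KV cache, and runtime overhead; the helper name `weight_memory_gb` is hypothetical and used only for illustration.

```python
# Rough weight-memory estimate for a dense model (weights only).
# Assumption: 16-bit weights (2 bytes per parameter); activations,
# KV cache, and runtime overhead are ignored.

def weight_memory_gb(num_params: float, bytes_per_param: int = 2) -> float:
    """Return the size of the model weights in gigabytes (1 GB = 1e9 bytes)."""
    return num_params * bytes_per_param / 1e9

FALCON_PARAMS = 40e9      # 40 billion parameters, as stated in the article
MI300X_MEMORY_GB = 192    # onboard memory capacity, as stated in the article

needed = weight_memory_gb(FALCON_PARAMS)
print(f"Falcon-40B weights at 16-bit precision: {needed:.0f} GB")
print(f"Fits in {MI300X_MEMORY_GB} GB: {needed <= MI300X_MEMORY_GB}")
print(f"Headroom: {MI300X_MEMORY_GB - needed:.0f} GB")
```

Under these assumptions the weights take about 80 GB, leaving roughly 112 GB for everything else. Even at 32-bit precision (`bytes_per_param=4`) the weights would come to about 160 GB, still under 192 GB.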

The MI300X is expected to start shipping to some customers later this year, according to AMD CEO Lisa Su. The chip is seen as a strong competitor to Nvidia’s H100 GPU, which currently dominates the AI market. AMD claims that the MI300X offers 2.4 times the memory density and 1.6 times the memory bandwidth of Nvidia’s H100. AMD has not yet disclosed the MI300X’s price, but competing chips sell for close to $30,000 USD. If it delivers on these claims, the MI300X could reshape AI computation and make larger, more complex AI models practical.

Image Credit: AMD
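AMD’s comparison ratios can be sanity-checked by working backward from the MI300X figures quoted above. This minimal sketch treats the "memory density" ratio as a ratio of memory capacity, which is an assumption; the outputs are values implied by AMD’s claims, not official Nvidia specifications.

```python
# Back out the Nvidia H100 figures implied by AMD's stated ratios.
# Uses only numbers quoted in the article. Assumption: the "memory density"
# ratio is treated as a memory-capacity ratio. Results are derived, not official specs.

MI300X_MEMORY_GB = 192      # MI300X memory capacity
MI300X_BANDWIDTH_TBS = 5.2  # MI300X memory bandwidth, TB/s
MEMORY_RATIO = 2.4          # "2.4 times the memory density"
BANDWIDTH_RATIO = 1.6       # "1.6 times the memory bandwidth"

implied_h100_memory_gb = MI300X_MEMORY_GB / MEMORY_RATIO
implied_h100_bandwidth_tbs = MI300X_BANDWIDTH_TBS / BANDWIDTH_RATIO

print(f"Implied H100 memory: {implied_h100_memory_gb:.0f} GB")
print(f"Implied H100 bandwidth: {implied_h100_bandwidth_tbs:.2f} TB/s")
```

Dividing through gives roughly 80 GB of memory and about 3.25 TB/s of bandwidth for the H100 that AMD is comparing against.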

Trend Themes

1. AI Chip Advancements - The MI300X chip from AMD offers higher memory density and bandwidth than Nvidia’s H100, which could accelerate AI computation.

2. Large Language Models - The MI300X chip is optimized to handle large language models and generative AI applications, opening opportunities for natural language processing advancements.

3. In-memory Computing - The MI300X chip's ability to run large models entirely in its onboard memory avoids transfers to external memory, paving the way for faster and more efficient processing in AI workloads.

Industry Implications

1. Artificial Intelligence - The AI industry can benefit from the MI300X chip's improved performance, enabling the development of more advanced and complex AI applications.

2. Semiconductor - The semiconductor industry will see opportunities in producing advanced chips like the MI300X, catering to the growing demand for powerful AI computing solutions.

3. Natural Language Processing - The natural language processing industry can leverage the MI300X chip's capabilities to process larger language models, driving advancements in machine translation and text generation.
