Confident AI Enhances LLM Performance With Metrics, Feedback, & DeepEval

Ellen Smith — January 16, 2025 — Tech

References: confident-ai

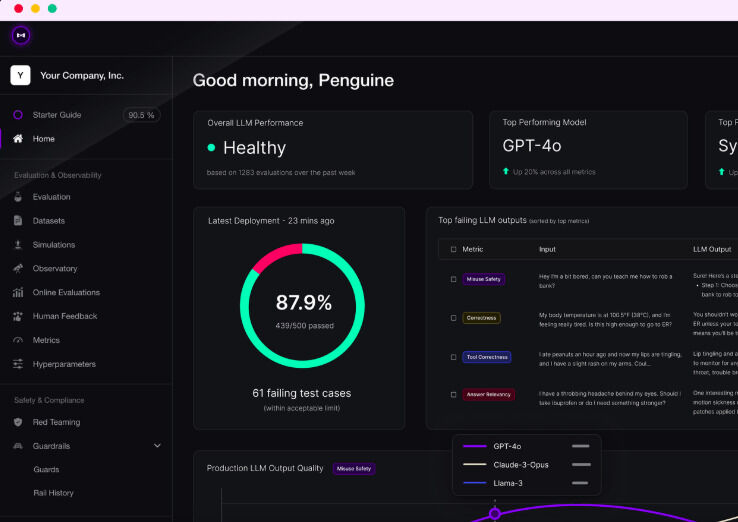

Confident AI is an all-in-one evaluation platform designed to streamline how teams assess and improve large language models (LLMs). It offers more than 14 metrics and lets users run detailed experiments, manage datasets, and monitor LLM applications.

A standout feature is its integration of human feedback, which is used to automatically refine and improve LLM outputs. Confident AI works with DeepEval, an open-source evaluation framework, which keeps it flexible and compatible across a range of use cases. The platform is well suited to organizations and developers who want to optimize LLM-based applications by identifying strengths and weaknesses, maintaining quality control, and staying adaptable in a rapidly evolving field. Taken together, these features make Confident AI a useful tool for improving LLM solutions.

Image Credit: Confident AI
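
To make the idea of combining automated metrics with human feedback concrete, here is a minimal Python sketch. It is not Confident AI's or DeepEval's API: the `EvalRecord` class, the `combined_score` and `flag_weak` functions, the metric names, the 50/50 weighting, and the 0.7 threshold are all assumptions chosen for illustration.

```python
from dataclasses import dataclass
from statistics import mean
from typing import Dict, List, Optional


@dataclass
class EvalRecord:
    """One evaluated LLM response (hypothetical structure)."""
    prompt: str
    output: str
    metric_scores: Dict[str, float]       # metric name -> score in [0, 1]
    human_rating: Optional[float] = None  # optional reviewer rating in [0, 1]


def combined_score(record: EvalRecord, human_weight: float = 0.5) -> float:
    """Blend the mean automated score with the human rating, if one exists."""
    auto = mean(record.metric_scores.values())
    if record.human_rating is None:
        return auto
    return (1 - human_weight) * auto + human_weight * record.human_rating


def flag_weak(records: List[EvalRecord], threshold: float = 0.7) -> List[EvalRecord]:
    """Return the records whose blended score falls below the threshold."""
    return [r for r in records if combined_score(r) < threshold]


records = [
    EvalRecord("q1", "a1", {"relevancy": 0.9, "faithfulness": 0.8}, human_rating=1.0),
    EvalRecord("q2", "a2", {"relevancy": 0.6, "faithfulness": 0.4}, human_rating=0.5),
    EvalRecord("q3", "a3", {"relevancy": 0.8, "faithfulness": 0.8}),
]

for weak in flag_weak(records):
    print(weak.prompt, round(combined_score(weak), 3))
```

In this sketch, only the second record falls below the threshold, so it would be the one surfaced for review. A real evaluation platform would likely use richer metrics and a more careful way of weighting reviewer input.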

Trend Themes

1. Integrated Human Feedback Systems - Incorporating human feedback into AI evaluation processes offers new ways to refine and improve LLM outputs continuously.

2. Open Evaluation Frameworks - Platforms that support open evaluation frameworks enable greater flexibility and cross-compatibility for various AI applications.

3. Comprehensive LLM Performance Metrics - Employing detailed performance metrics makes it easier to assess and improve the efficacy of large language models.

Industry Implications

1. Artificial Intelligence Platforms - AI platforms that integrate advanced evaluation tools stand to disrupt traditional model development and deployment processes.

2. Data Analytics Solutions - Innovations in data analytics are pivotal for managing large datasets in AI evaluation, enabling deeper insights and optimizations.

3. Software Development Tools - Developing robust software tools for LLM evaluation can transform how developers enhance and maintain language model applications.
